Foresight

- 30 Oct 23

Humanity and the AI-Created World: Are We Ready?

Key Takeaways:

- The use of AI to create online content is growing, and it brings challenges such as disinformation, deepfakes, and hallucinated facts.

- A significant amount of inappropriate content is already being generated with AI, and its impact is broad: AI-generated images of an explosion near the United States Department of Defense headquarters, a faked executive's voice used to trick a company into transferring money, and a deepfake of the Ukrainian president conceding defeat in the Russia-Ukraine war.

- Government, the private sector, the media, news agencies, content creators, and the general public should work together to build a world in which AI-generated content is created and consumed ethically, knowledgeably, and mindfully. That means fostering ethical standards, public awareness, an understanding of how the tools are used, and attention to the consequences their use may have.

The use of Artificial Intelligence (AI) is growing rapidly, and much of the conversation centers on Generative AI: systems built on generative models that learn from existing datasets in order to produce new content. Tools such as ChatGPT, Midjourney, and DALL-E are already being used to create a significant amount of content.

However, this growth also brings challenges, because a substantial amount of inappropriate content is being generated. We are seeing more fake news, false information, inaccuracies, and half-truths (disinformation); videos, photos, and voice recordings fabricated to misrepresent real people (deepfakes); and claims presented as fact without any evidence or supporting data (hallucinated facts).

The question is: as our world fills up with content and systems created by AI, are we prepared? How will this affect our lives, and how should we respond?

Thinking before sharing in an age when AI can generate such convincing content!

In an era of widespread AI use, we must question whether the content we routinely encounter is true. What counts as truth? We should not take everything we see at face value, especially since it is now often almost impossible to tell whether the content we come across online was made by a human or by AI.

In one study, AI was used to write false tweets, and participants were asked to identify which tweets were false. People were 3% less likely to spot false tweets generated by AI than false tweets written by humans. The large language model GPT-3 proved notably good at producing convincing falsehoods, even better than humans themselves. One possible explanation is that GPT-3's text tends to be more structured than human writing while also being more condensed, which makes it easier to process. The overall difference is small, but it is concerning given how much AI-disseminated false information could grow in the future.

The researchers suggest that AI can produce false information faster, more efficiently, and more accurate-sounding than humans can. They also expect that repeating the study with OpenAI's newer large language model, GPT-4, would show an even larger difference, given GPT-4's greater capabilities.

According to The Influencer Marketing Factory's 2023 Creator Economy Report, 94.5% of content creators in the United States say they use AI in content creation, mainly to augment content and to generate images or videos. AI can streamline workflows and cut production time, but relying on it too heavily without proper scrutiny may have unforeseen and far-reaching negative consequences. Excessive dependence on AI could also stifle human creativity and discourage the exploration of new ideas.

Moreover, NewsGuard, a company that rates the credibility of online news sources, identified 49 websites in April 2023 alone whose content appeared to be predominantly AI-generated. Some were producing almost all of their content with AI, in some cases hundreds of articles per day. Using popular tools like ChatGPT in this way raises concerns about quality and transparency, since such sites tend to prioritize quantity over quality and publish substandard content online. The closer AI-generated content gets to human writing, the easier it becomes to spread inaccurate information on the internet. If users share content without evaluating its quality, or if people deliberately spread content with malicious intent, the consequences could be severe.

We must recognize that information on the internet is a mix of accurate data, opinions that may be biased, distorted information, and outright falsehoods. Many people assume that computers give accurate answers when they process information for us. However, if the input is poor, the output will be just as poor ("garbage in, garbage out").

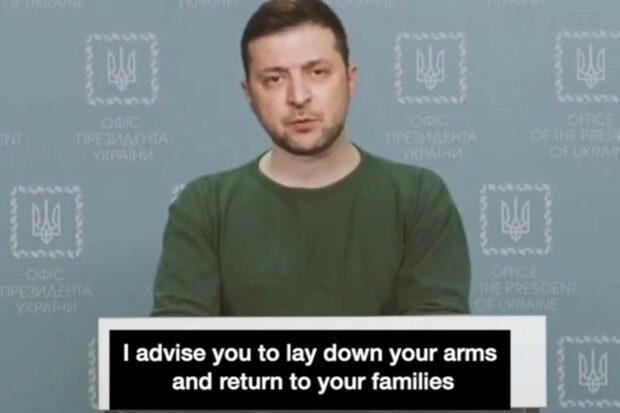

Image by PPTVHD36

In May 2023, an image appearing to show an explosion near the Pentagon, the headquarters of the United States Department of Defense, went viral on Twitter. Prominent outlets such as Russia Today (RT) also shared it. It was later confirmed that the image had been generated by AI, but not before it caused significant public alarm, to the extent that the U.S. stock market briefly dropped.

Image by ThaiPublica

The conflict between Ukraine and Russia has also seen fake content used to attack the opposing side. In early 2022, a deepfake video circulated showing Volodymyr Zelenskyy, the President of Ukraine, announcing Ukraine's surrender. Deepfake technology uses AI to manipulate a person's appearance and voice in images, video, and audio, for example by superimposing one person's face onto another's so that they appear, in a seamless video, to say things they never said. In situations like an ongoing war, such content can mislead and deceive people. Between 2019 and 2020, the amount of deepfake content online increased by as much as 900%.

Similarly, in a 2019 incident, the CEO of a British energy company believed he was on the phone with his boss, the chief executive of the firm's German parent company, who urgently asked him to send $243,000 to a supposed supplier in Hungary within an hour. The caller was in fact a fraudster using AI to imitate the executive's voice.

AI-assisted content creation clearly has benefits: it improves efficiency and capability, supports creativity and innovation, and saves time and money in production. However, it also raises concerns about accuracy and credibility, since AI-generated content can lead to misinterpretation or spread false information. And as AI takes over work once done by people, content creators and the media industry will have to adapt, both to stay competitive and to raise the quality of what they produce.

What will the future environment of online information look like?

Experts cited by the European Union Agency for Law Enforcement Cooperation (Europol) estimate that as much as 90% of online content could be generated by AI by 2026. This rise is expected to bring more information manipulation, with far-reaching effects, including legal issues and many other challenges we will face in a world where most content is AI-generated.

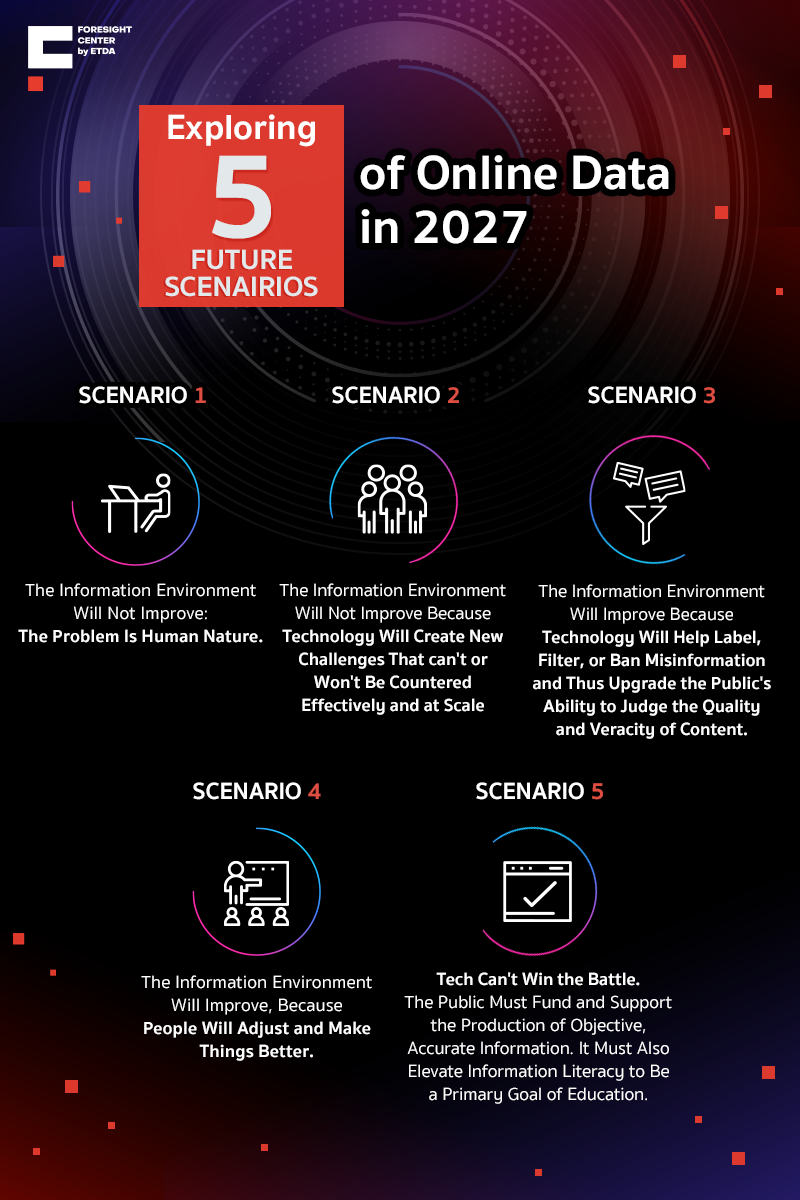

Let's look at the predictions for the future online information environment presented by the Pew Research Center and Elon University's Imagining the Internet Center. The crucial question is whether people like us will be able to cope with the changes ahead. First published in 2017, these forecasts looked ten years ahead, to 2027, and they are especially interesting now that AI is discussed in so many contexts. They group the possible futures into three broad outcomes covering five scenarios:

1. The information environment will not improve.

Scenario 1: The information environment will not improve due to human nature.

- Because the internet and technology keep growing and developing rapidly, humans and AI can create and distribute distorted information instantly.

- People tend to believe and engage with content that reflects their own views or what is familiar to them, because they naturally seek belonging and prefer convenience.

- Under current economic, political, and social systems, those in power, including well-resourced governments, benefit most when the information environment is chaotic.

- Human nature and information overload leave people divided and in conflict, making it hard to agree on a shared body of knowledge. As trusted news sources decline, misinformation from less reliable sources becomes more likely, causing confusion.

- Some people can access and pay for reliable information sources while others struggle to find trustworthy information, widening the digital divide.

Scenario 2: The information environment will not improve because of new technological challenges that humans are not equipped to handle effectively.

- Weaponized narratives and other false content are amplified by social media, filtering algorithms, and AI.

- Those who create content for personal gain rather than the public interest are likely to thrive and become leaders in the information war.

- Efforts to address inaccurate information might result in reduced privacy and limited freedom of expression online.

2. The information environment will improve.

Scenario 3: The information environment will improve as technology helps label, filter, and block the spread of false information, empowering the public to assess the quality and veracity of content.

- Helpful technologies include algorithmic filtering, browser extensions, apps and plugins, and trust ratings for content.

- Legal reforms may include software liability laws, user identification requirements for transparency and online security, and regulation of social networks such as Facebook.

Scenario 4: The information environment will improve as people adapt and find ways to make things better.

- False information still exists, but humans find ways to mitigate its impact and manage it better as they become more skilled at discerning content.

- Crowdsourcing will help highlight verified facts and block those who propagate lies and propaganda. Some also place their hopes in distributed ledgers (blockchain).

3. Major programs are necessary.

Scenario 5: Tech can't win the battle. The public must fund and support the production of objective, accurate information. It must also elevate information literacy to be a primary goal of education.

- Funding and support should focus on resilient, trustworthy public media.

- Increasing online information literacy should be a primary goal at all education levels.

In summary, the forecasts point to three broad outcomes. First, the online information environment might not improve. Content created by humans and AI could multiply rapidly without proper management or correction, technology could outpace human capabilities, and weaponized content and other false information would be amplified by social media and by AI-driven filtering algorithms that cater to personal biases.

Second, the information environment could improve. Better filtering algorithms, browsers, apps, and plugins, along with trust rating systems, could make it healthier. Misinformation would still exist, but people would find ways to minimize its impact as they become more skilled at discerning content. Third, improvement may depend on major programs: collaborative projects, funding and support for trustworthy media, and education that makes information literacy a priority.

Having looked at these scenarios for the online information environment, which one would you want our country to be in?

How should we deal with an online information environment shaped by AI-generated content?

Dealing with a digital landscape shaped by AI-generated content requires developing and implementing strategies to reduce the risks that come with its growing use. Several sectors each have a distinct role to play:

Government Sector

- Legal Framework and Regulation

The government should establish comprehensive legal frameworks and regulations to address the creation and dissemination of AI-generated content, defining responsibilities for content creators and platforms and setting penalties for violations.

- Policy and Legislation Development

It is essential to develop adaptive policies and laws that keep pace with the changing AI landscape, including guidelines for labeling AI-generated content and mechanisms for content takedown in case of misinformation.

- Research and Development Support

It is crucial to fund research into advanced technologies for detecting and analyzing AI-generated content, and to foster interdisciplinary research spanning technology, law, ethics, and the social sciences.

- Platform Responsibility Enforcement

The government should work with online platforms to implement transparent, AI-powered content verification systems and mechanisms for reporting inappropriate or misleading AI-generated content.

- Education and Training Initiatives

Organizing educational programs to increase awareness among citizens, government employees, and law enforcement about AI-generated content and its implications will be beneficial.

- Public-Private Collaboration

Foster collaboration between the private sector and government to develop tools and guidelines for addressing AI-generated content. Such collaboration helps ensure a comprehensive approach that draws on the expertise of both sectors to manage AI-generated content effectively.

Private Sector

- Development of Efficient AI Content Detection Tools

The private sector should actively work on creating effective technologies and tools capable of identifying AI-generated content with high accuracy. This effort should also promote and enhance technological innovations that can verify and scrutinize AI-generated content, including systems designed to prevent the spread of AI-generated misinformation.

- Support for Education and Awareness

Initiatives should be undertaken to provide education, enhance awareness, and impart knowledge about effectively dealing with AI-generated content. This support should extend to all members of the organization, particularly content creators who share information with the public.

- Facilitating Collaboration and Knowledge Sharing

Encourage collaboration and the exchange of knowledge among various stakeholders regarding the challenges and opportunities presented by AI technology, as well as strategies for managing content generated by AI.

- Establishment of Expert Teams

Private sector entities should establish dedicated teams comprising experts who possess specialized skills in content auditing and quality control of AI-generated content. This will ensure that content meets appropriate standards before dissemination.

General Public

- Digital Literacy and Learning

Develop digital literacy and knowledge related to creating content using AI, as well as methods to verify information. Foster additional understanding and explore technologies and strategies for managing AI-generated information through training programs and learning initiatives.

- Awareness of Information Verification

Recognize the importance of verifying information before trusting or sharing it, and make checking its accuracy and credibility a priority before passing it on.

- Reporting Inappropriate Content

Following each platform's guidelines, report any content you encounter that is inappropriate, falls short of the platform's standards, or turns out to be false. Take a responsible part in maintaining the quality of the information that is shared.

- Learning Information Verification Methods

Educate oneself about information verification techniques and actively disseminate accurate and ethical information to prevent the distribution of inappropriate information.

Content Creators (Media, News Agencies, Creators)

- Enhanced Responsibility in Content Creation

Increasing awareness among content creators about the potential consequences of AI-generated content dissemination fosters responsible content creation.

- Verification and Credibility

Rigorous fact-checking processes should be implemented before publishing content to maintain accuracy and credibility.

- AI Tool Understanding

Educating content creators about AI tools' capabilities and limitations promotes ethical use and prevents the creation of misleading content.

- Creating Unique and Valuable Content

Encouraging the production of original and valuable content that contributes positively to discourse and cannot be easily replaced by AI-generated alternatives is essential.

Now it's your turn! Analyze the next piece of content you see on social media. Do you think AI was used to create it? Where might it have been used? Do you consider the information trustworthy? Check multiple sources before sharing. And when you use AI tools to create content yourself, examine the output for accuracy and think about the consequences it could have.

Of course, we may be creating content with no malicious intent at all. But who would have thought that we could still be adding false information to the online world without realizing it? Or that someone might be misusing what we post without our knowledge, with consequences more severe than we imagine? So don't forget: think before you post, think before you believe, and think before you share!